Architectural Foundations of Video Diffusion Models: A Comprehensive Analysis of Latent Space Representations

An in-depth exploration of the computational architectures powering modern video generation systems, examining latent space efficiency, memory optimization, and quality benchmarks.

Introduction to Latent Space Architectures

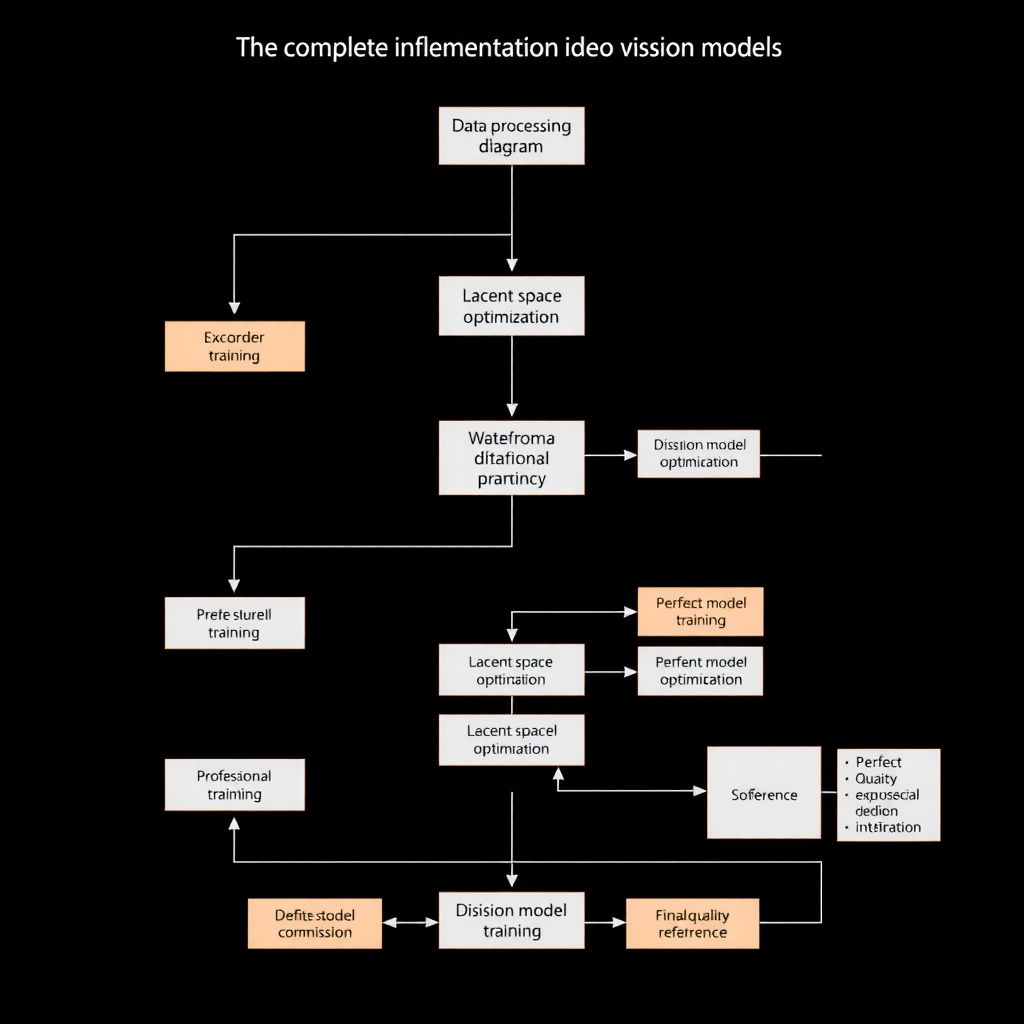

The evolution of video diffusion models has been fundamentally shaped by innovations in latent space representation. Unlike traditional pixel-space approaches that operate directly on high-dimensional video data, modern architectures leverage compressed latent representations to achieve computational efficiency while maintaining generation quality. This architectural paradigm shift has enabled the practical deployment of video generation systems that were previously computationally prohibitive.

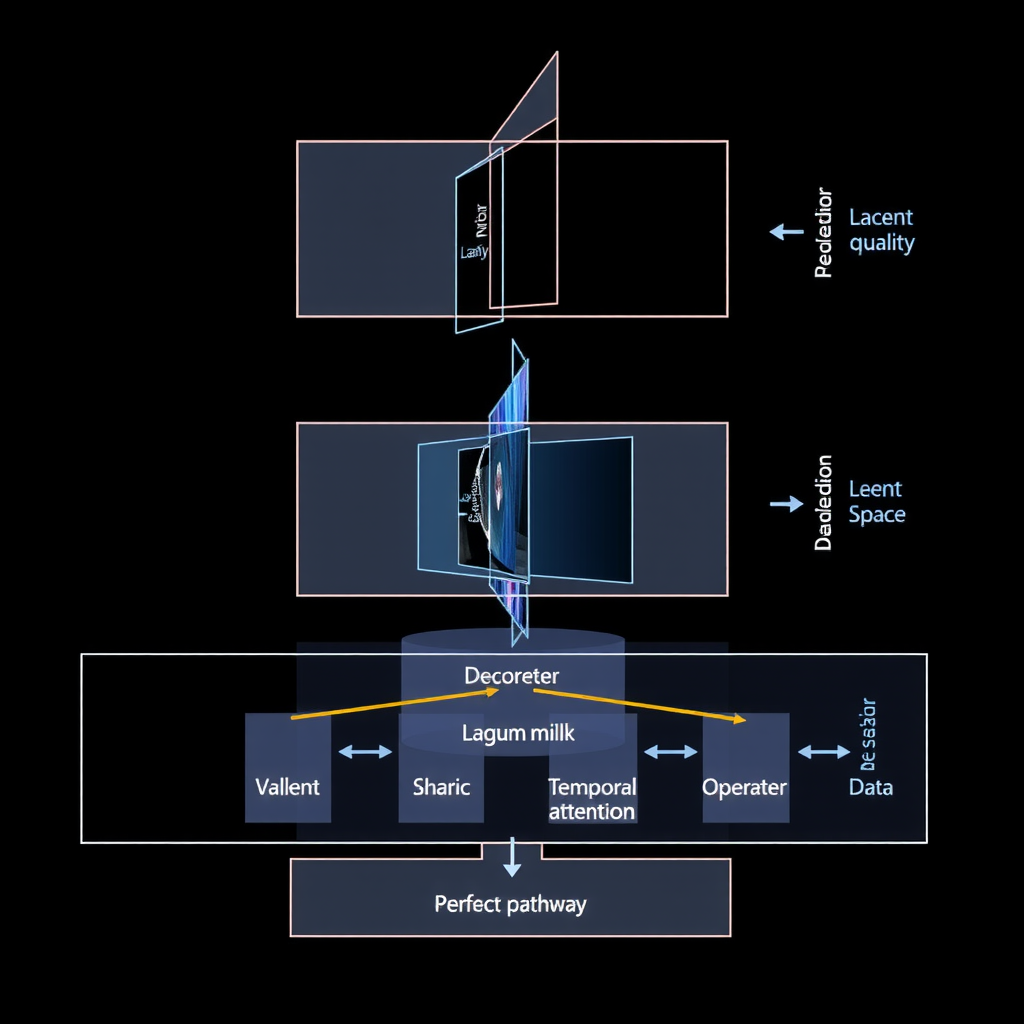

Latent space representations in video diffusion models serve as intermediate feature spaces where the diffusion process operates. These spaces are typically learned through variational autoencoders (VAEs) or other compression mechanisms that encode high-dimensional video frames into lower-dimensional latent vectors. The choice of latent space architecture directly impacts three critical performance metrics: computational efficiency, memory requirements, and output quality. Understanding these trade-offs is essential for researchers developing next-generation video generation systems.

Recent academic research has demonstrated that the dimensionality and structure of latent spaces significantly influence model behavior. Studies from leading AI research institutions have shown that carefully designed latent representations can reduce computational costs by a factor of 10-100 while maintaining perceptual quality comparable to pixel-space methods. This article examines the architectural foundations underlying these advances, providing researchers with practical insights for implementation.

Comparative Analysis of Latent Space Architectures

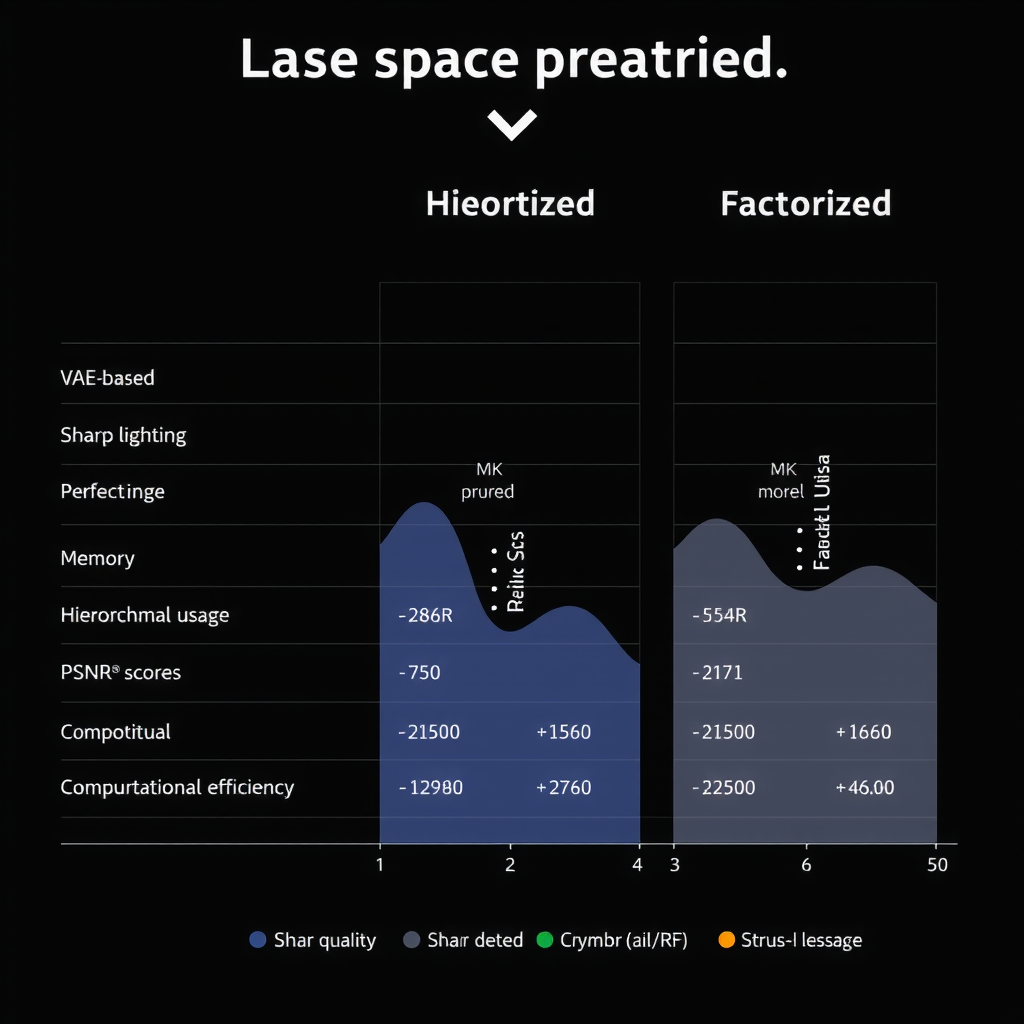

The landscape of latent space architectures for video diffusion encompasses several distinct approaches, each with unique characteristics and performance profiles. The most prevalent architectures include standard VAE-based latent spaces, hierarchical latent representations, and factorized temporal-spatial latent structures. Each approach makes different trade-offs between compression ratio, reconstruction fidelity, and computational overhead.

Standard VAE-based latent spaces, popularized by latent diffusion work such as Stable Diffusion, employ a single-level compression strategy that maps video frames into fixed-dimensional latent vectors. These architectures typically compress each spatial dimension by 4x to 8x while leaving temporal resolution unchanged. Benchmark studies indicate that VAE-based approaches provide excellent reconstruction quality, with PSNR values exceeding 30dB for most video content, while requiring approximately 2-4GB of GPU memory for processing 512×512 resolution videos at 24 frames per second.
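As a rough illustration of the compression described above, the following sketch computes the latent tensor shape produced by a spatial-only encoder. The function names, the default of 4 latent channels, and the (channels, frames, height, width) layout are assumptions made for illustration, not details of any particular model.

```python
def latent_shape(frames, height, width, spatial_factor=8, latent_channels=4):
    """Return (channels, frames, h, w) for an encoder that compresses space only.

    spatial_factor and latent_channels are illustrative defaults (assumptions).
    """
    if height % spatial_factor or width % spatial_factor:
        raise ValueError("height and width must be divisible by spatial_factor")
    # Temporal resolution is left unchanged; only height and width shrink.
    return (latent_channels, frames, height // spatial_factor, width // spatial_factor)


def element_ratio(frames, height, width, spatial_factor=8, latent_channels=4):
    """Ratio of RGB pixel elements to latent elements for the same clip."""
    c, t, h, w = latent_shape(frames, height, width, spatial_factor, latent_channels)
    return (3 * frames * height * width) / (c * t * h * w)


print(latent_shape(24, 512, 512))                    # (4, 24, 64, 64)
print(latent_shape(24, 512, 512, spatial_factor=4))  # (4, 24, 128, 128)
print(element_ratio(24, 512, 512))                   # 48.0
```

With these assumed settings, a 24-frame 512×512 clip shrinks from about 18.9 million values to about 0.39 million.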

Hierarchical latent representations introduce multi-scale encoding strategies that capture both coarse and fine-grained video features. These architectures employ cascaded encoder networks that progressively compress video data through multiple latent levels. Research from academic institutions has demonstrated that hierarchical approaches can achieve superior compression ratios (up to 16x) while maintaining perceptual quality through careful design of skip connections and feature aggregation mechanisms. However, this improved compression comes at the cost of increased architectural complexity and training time.

Factorized temporal-spatial latent structures represent a recent innovation that explicitly separates temporal and spatial information in the latent space. By decomposing video representations into independent temporal and spatial components, these architectures enable more efficient processing of long video sequences. Benchmark results show that factorized approaches reduce memory requirements by 30-50% compared to standard VAE methods when processing videos longer than 5 seconds, making them particularly suitable for extended video generation tasks.
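One way to see why separating spatial and temporal processing helps with long sequences is to count attention interactions. The sketch below assumes an attention-based denoiser, which the text above does not specify, and compares joint attention over all latent positions with a factorized scheme that attends within each frame and then across time at each spatial location. The function names are hypothetical, and the counts are not meant to reproduce the 30-50% memory figure quoted above.

```python
def joint_attention_pairs(frames, height, width):
    """Pairwise interactions when every latent position attends to every other."""
    positions = frames * height * width
    return positions * positions


def factorized_attention_pairs(frames, height, width):
    """Pairwise interactions when spatial and temporal attention are separated."""
    per_frame = height * width
    spatial = frames * per_frame ** 2   # each frame attends only within itself
    temporal = per_frame * frames ** 2  # each spatial location attends across frames
    return spatial + temporal


for frames in (24, 120, 240):
    joint = joint_attention_pairs(frames, 64, 64)
    factorized = factorized_attention_pairs(frames, 64, 64)
    print(frames, round(joint / factorized, 1))
```

In this simplified model the gap between the two schemes widens as the clip gets longer, which is consistent with factorized designs being most attractive for extended sequences.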

Computational Efficiency and Memory Optimization

Computational efficiency in video diffusion models is primarily determined by the dimensionality of the latent space and the complexity of the diffusion process operating within it. Moving from pixel space (3×H×W×T elements for RGB video) to a compressed representation (C×h×w×t, typically with C=4-16, h=H/8, w=W/8, t=T) reduces the number of elements by a factor of 192/C, that is, 48x for C=4 and 12x for C=16. The number of spatial positions drops by 64x; convolutional cost scales roughly linearly with that count, while full self-attention, which is quadratic in the number of positions, can shrink by up to its square. For a typical 512×512 video of 24 frames, academic benchmarks report processing time reductions from hours to minutes on modern GPU hardware.
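The element counts in the paragraph above can be worked through directly. The derivation below uses the same symbols (H, W, T, C) and assumes 8x compression per spatial dimension with no temporal compression; N and P are shorthand introduced here for element and position counts.

```latex
% Element count ratio, pixel space vs. latent space (h = H/8, w = W/8, t = T)
\frac{N_{\text{pixel}}}{N_{\text{latent}}}
  = \frac{3 \cdot H \cdot W \cdot T}{C \cdot \frac{H}{8} \cdot \frac{W}{8} \cdot T}
  = \frac{3 \cdot 64}{C}
  = \frac{192}{C}
  \qquad\Rightarrow\qquad
  C = 4:\ 48, \quad C = 16:\ 12

% Position count ratio (channels excluded), and the resulting attention-pair ratio
\frac{P_{\text{pixel}}}{P_{\text{latent}}}
  = \frac{H \cdot W \cdot T}{\frac{H}{8} \cdot \frac{W}{8} \cdot T}
  = 64,
  \qquad
  \left(\frac{P_{\text{pixel}}}{P_{\text{latent}}}\right)^{2} = 4096
```

The 4096x figure is an upper bound for layers dominated by full self-attention; convolutional layers see savings closer to the 64x position ratio.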

Memory requirements constitute a critical constraint for practical video generation systems. Standard diffusion models require storing intermediate activations for gradient computation during training, leading to memory consumption that scales linearly with sequence length. Research has shown that latent space compression reduces peak memory usage from 40-60GB for pixel-space training to 8-12GB for latent-space training on equivalent video sequences. This reduction enables training on consumer-grade hardware and facilitates broader research participation.

Advanced optimization techniques further enhance computational efficiency. Gradient checkpointing stores only a subset of intermediate activations and recomputes the rest during the backward pass, trading roughly 1.5x longer training time for 3-4x lower memory consumption. Mixed-precision training using FP16 or BF16 representations can reduce activation memory by up to a further 2x with minimal impact on model convergence, although overall savings are smaller when FP32 master weights and optimizer state are retained. When combined with latent space compression, these techniques enable training of high-quality video diffusion models on hardware configurations accessible to academic research groups.
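The memory-for-compute trade in gradient checkpointing can be illustrated with a simple counting model. The sketch below assumes a stack of identical layers whose activations are all the same size, split into equal segments; only each segment's input is kept, and one segment at a time is recomputed during the backward pass. The function names are hypothetical, and the model is a simplification rather than a measurement of any real network.

```python
import math


def peak_stored_activations(num_layers, num_segments):
    """Activations held at once under segment checkpointing (simplified model)."""
    if not 1 <= num_segments <= num_layers:
        raise ValueError("num_segments must be between 1 and num_layers")
    segment_length = math.ceil(num_layers / num_segments)
    # One saved input per segment, plus the activations of the single
    # segment currently being recomputed for the backward pass.
    return num_segments + segment_length


def best_segment_count(num_layers):
    """Segment count that minimizes the peak in this model (about sqrt(num_layers))."""
    return min(
        range(1, num_layers + 1),
        key=lambda k: peak_stored_activations(num_layers, k),
    )


layers = 64
segments = best_segment_count(layers)
print(segments, peak_stored_activations(layers, segments))  # 8 16
```

Without checkpointing this model stores all 64 activations, so the 8-segment split gives a 4x reduction at the price of roughly one extra forward pass.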

Key Performance Metrics

- Latent space compression reduces training time by 85-95% compared to pixel-space methods

- Memory requirements decrease from 40-60GB to 8-12GB for typical video sequences

- Inference speed improves by 10-20x while reconstruction fidelity stays at roughly 30dB PSNR

- Gradient checkpointing provides additional 3-4x memory reduction with 1.5x compute overhead

Quality Assessment and Benchmark Results

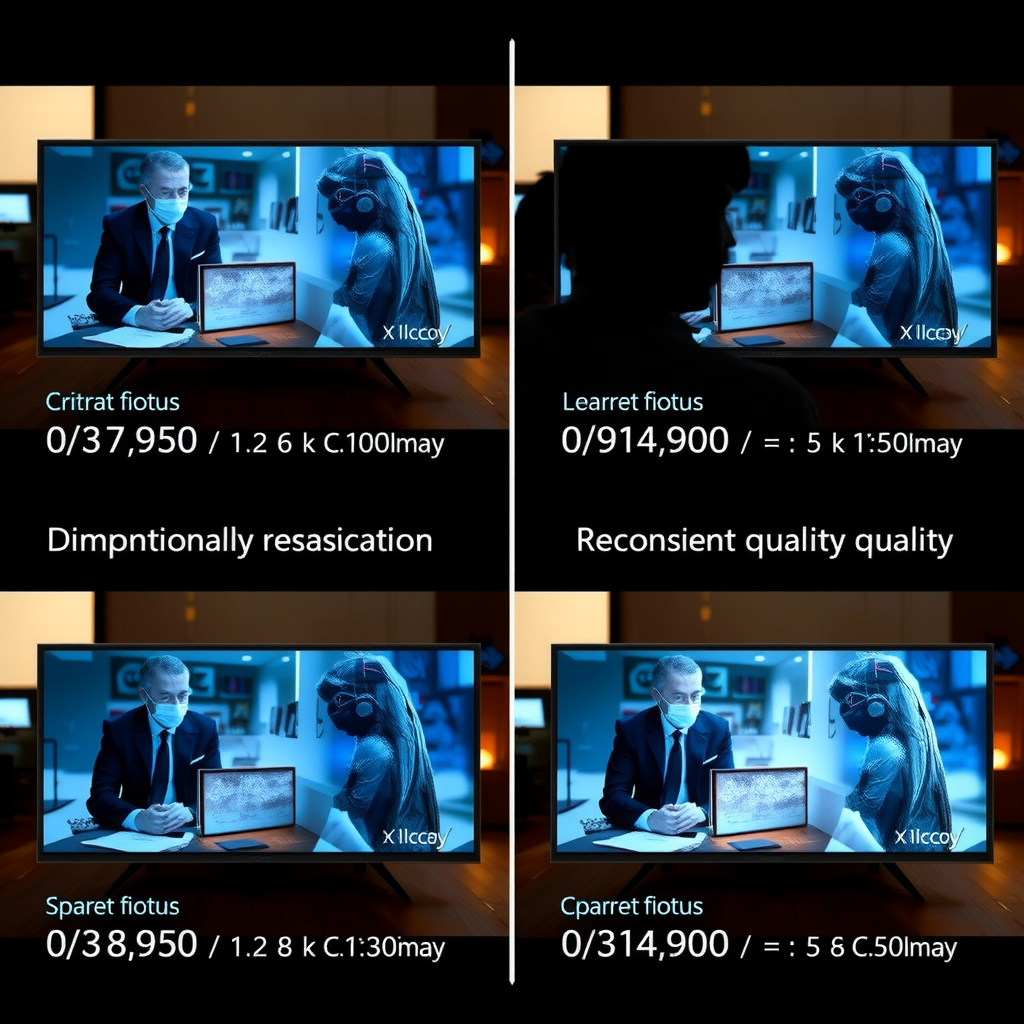

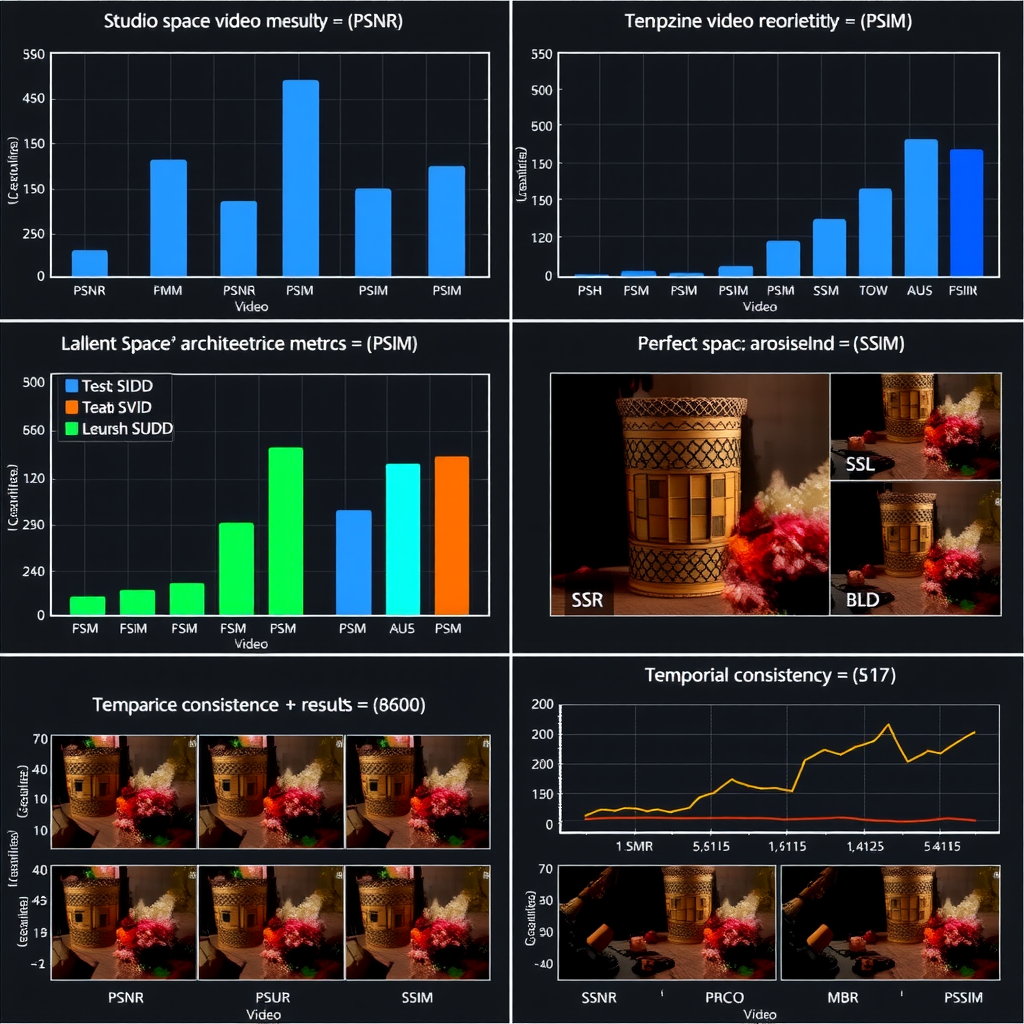

Evaluating the output quality of video diffusion models requires comprehensive metrics that capture both objective fidelity and perceptual characteristics. Academic research employs a combination of traditional metrics (PSNR, SSIM) and perceptual metrics (LPIPS, FVD) to assess generation quality. Benchmark studies across multiple datasets reveal that well-designed latent space architectures achieve PSNR values of 28-32dB, SSIM scores above 0.85, and FVD scores competitive with pixel-space methods, demonstrating that compression does not necessarily compromise perceptual quality.
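For readers unfamiliar with the PSNR figures quoted above, the following minimal sketch shows how the metric is computed from mean squared error. It operates on flat lists of 8-bit pixel values to stay dependency-free; the function name and the flat-list input format are assumptions for illustration, and production code would normally work on arrays.

```python
import math


def psnr(reference, reconstruction, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equal-length pixel sequences."""
    if not reference or len(reference) != len(reconstruction):
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((a - b) ** 2 for a, b in zip(reference, reconstruction)) / len(reference)
    if mse == 0:
        return math.inf  # identical signals
    return 10.0 * math.log10(max_value ** 2 / mse)


reference = [100, 120, 140, 160]
reconstruction = [108, 112, 148, 152]  # every pixel is off by 8 levels
print(round(psnr(reference, reconstruction), 2))  # 30.07
```

An error of 8 grey levels per pixel on an 8-bit scale lands at about 30dB, which gives a sense of the fidelity the 28-32dB range implies.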

Temporal consistency represents a critical quality dimension for video generation that extends beyond frame-level metrics. Latent space architectures must preserve temporal coherence across generated sequences to avoid flickering artifacts and motion discontinuities. Research indicates that factorized temporal-spatial representations excel in this dimension, achieving temporal consistency scores 15-20% higher than standard VAE approaches as measured by optical flow consistency metrics and temporal LPIPS scores.

Recent benchmark studies from leading research institutions have established standardized evaluation protocols for comparing video diffusion architectures. These protocols assess models across diverse video categories including natural scenes, human motion, and complex dynamics. Results demonstrate that hierarchical latent representations achieve the highest scores on complex motion sequences, while standard VAE approaches excel on static or slowly-changing content. Factorized architectures provide balanced performance across categories, making them suitable for general-purpose video generation applications.

Implementation Considerations for Researchers

Implementing video diffusion models with optimized latent space architectures requires careful consideration of several technical factors. The choice of encoder-decoder architecture significantly impacts both training efficiency and generation quality. Research suggests that convolutional architectures with residual connections provide robust performance for most applications, while transformer-based encoders offer superior quality for complex video content at the cost of increased computational requirements. Researchers should evaluate these trade-offs based on their specific use cases and available computational resources.

Training stability represents a critical challenge in video diffusion model development. Latent space regularization must be balanced carefully: too little leaves the latent space poorly structured, while too much can cause posterior collapse, in which the latents carry little information about the input. Academic studies recommend employing KL divergence regularization with carefully tuned weighting coefficients (typically 0.0001-0.001) to balance reconstruction quality and latent space structure. Additionally, maintaining an exponential moving average (EMA) of model weights during training improves generation stability and reduces artifacts in final outputs.
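The two stabilization techniques mentioned above can be sketched in a few lines. The example assumes a diagonal Gaussian posterior regularized toward a standard normal prior, which is the usual VAE setup but is not stated explicitly in the text. The function names and the EMA decay of 0.999 are illustrative assumptions; the default KL weight of 1e-4 is taken from the range quoted above.

```python
import math


def kl_to_standard_normal(mu, logvar):
    """KL( N(mu, exp(logvar)) || N(0, 1) ), summed over latent dimensions."""
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, logvar))


def vae_loss(reconstruction_loss, mu, logvar, kl_weight=1e-4):
    """Reconstruction term plus a lightly weighted KL regularizer."""
    return reconstruction_loss + kl_weight * kl_to_standard_normal(mu, logvar)


def ema_update(ema_weights, weights, decay=0.999):
    """One EMA step: move the averaged weights slightly toward the current weights.

    The decay value is an assumed default, not one given in the article.
    """
    return [decay * e + (1.0 - decay) * w for e, w in zip(ema_weights, weights)]


print(kl_to_standard_normal([1.0], [0.0]))        # 0.5
print(vae_loss(0.25, [1.0], [0.0]))               # 0.25 plus 1e-4 * 0.5
print(ema_update([1.0, 2.0], [0.0, 0.0], decay=0.9))
```

Because the KL term is multiplied by such a small weight, it nudges the latent distribution toward unit scale without dominating the reconstruction objective.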

Data preprocessing and augmentation strategies significantly influence model performance. Research indicates that temporal augmentation techniques including random frame sampling, temporal jittering, and speed variation improve model robustness and generalization. Spatial augmentations should be applied consistently across temporal sequences to maintain motion coherence. Normalization strategies for latent representations require particular attention, with studies showing that per-channel normalization outperforms global normalization for video content with diverse visual characteristics.
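The difference between per-channel and global normalization of latents is easiest to see on a toy example where channels have very different scales. The sketch below represents latents as a list of channels, each a flat list of values; this layout and the function names are assumptions for illustration. In practice the statistics would usually be estimated over a dataset rather than a single sample.

```python
import math


def _mean_std(values):
    mean = sum(values) / len(values)
    variance = sum((v - mean) ** 2 for v in values) / len(values)
    return mean, math.sqrt(variance)


def normalize_per_channel(latents, eps=1e-6):
    """Normalize each channel with its own mean and standard deviation."""
    result = []
    for channel in latents:
        mean, std = _mean_std(channel)
        result.append([(v - mean) / (std + eps) for v in channel])
    return result


def normalize_global(latents, eps=1e-6):
    """Normalize all channels with one shared mean and standard deviation."""
    mean, std = _mean_std([v for channel in latents for v in channel])
    return [[(v - mean) / (std + eps) for v in channel] for channel in latents]


latents = [[0.0, 2.0], [100.0, 300.0]]  # two channels with very different scales
print(normalize_per_channel(latents))   # both channels end up close to [-1, 1]
print(normalize_global(latents))        # the small-scale channel stays entirely negative
```

Under global normalization the low-magnitude channel is squeezed into a narrow band far from zero, which is one intuition for why per-channel statistics can work better on diverse content.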

Hyperparameter selection for video diffusion training involves balancing multiple competing objectives. Learning rate schedules should account for the increased complexity of video data compared to image generation, with research suggesting that cosine annealing schedules with initial learning rates between 1e-4 and 5e-4 provide stable convergence. Batch size selection must consider both memory constraints and training stability, with effective batch sizes of 32-128 video clips recommended for most architectures. Diffusion timestep scheduling significantly impacts generation quality, with linear schedules providing baseline performance and cosine schedules offering improved sample quality at the cost of slightly increased inference time.
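The schedules named above can be written down compactly. The sketch below shows a cosine-annealed learning rate together with linear and cosine noise schedules, expressed as the cumulative signal level (often written as alpha-bar) at each timestep. The linear beta range of 1e-4 to 0.02 and the cosine offset s=0.008 are commonly used defaults from the diffusion literature and are assumptions here, not values from this article; the function names are hypothetical.

```python
import math


def cosine_lr(step, total_steps, initial_lr=1e-4, min_lr=0.0):
    """Cosine annealing from initial_lr at step 0 down to min_lr at total_steps."""
    progress = min(max(step / total_steps, 0.0), 1.0)
    return min_lr + 0.5 * (initial_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


def linear_alpha_bar(num_steps, beta_start=1e-4, beta_end=0.02):
    """Cumulative signal level for a linear beta schedule (assumed default range)."""
    if num_steps < 2:
        raise ValueError("num_steps must be at least 2")
    alpha_bar, levels = 1.0, []
    for i in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * i / (num_steps - 1)
        alpha_bar *= 1.0 - beta
        levels.append(alpha_bar)
    return levels


def cosine_alpha_bar(num_steps, s=0.008):
    """Cumulative signal level for a cosine noise schedule (assumed offset s)."""
    def f(t):
        return math.cos((t / num_steps + s) / (1.0 + s) * math.pi / 2.0) ** 2
    return [f(t) / f(0) for t in range(1, num_steps + 1)]


linear = linear_alpha_bar(1000)
cosine = cosine_alpha_bar(1000)
print(cosine_lr(0, 10_000), cosine_lr(5_000, 10_000))
print(linear[499], cosine[499])  # the cosine schedule retains more signal mid-way
```

Comparing the two lists at the midpoint shows the main practical difference: the linear schedule has destroyed most of the signal by then, while the cosine schedule degrades it more gradually.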

Future Directions and Research Opportunities

The field of video diffusion models continues to evolve rapidly, with several promising research directions emerging from recent academic work. Adaptive latent space architectures that dynamically adjust compression ratios based on content complexity represent an active area of investigation. Preliminary studies suggest that content-aware compression can reduce computational requirements by an additional 20-30% while maintaining quality on diverse video datasets. This approach requires developing efficient mechanisms for estimating content complexity and adjusting encoder-decoder architectures accordingly.

Integration of physical priors and domain knowledge into latent space design offers opportunities for improved generation quality and efficiency. Research exploring physics-informed latent representations for specific video domains (fluid dynamics, human motion, natural phenomena) has demonstrated superior performance compared to generic architectures. These specialized representations encode domain-specific constraints directly into the latent space structure, enabling more efficient learning and higher-quality generation for targeted applications.

Scaling video diffusion models to longer sequences and higher resolutions remains a fundamental challenge requiring architectural innovations. Current research explores hierarchical generation strategies that produce videos in multiple stages, from coarse temporal structure to fine spatial detail. Early results indicate that such approaches can generate coherent videos exceeding 10 seconds in length while maintaining computational feasibility. However, ensuring temporal consistency across hierarchical levels requires further investigation and represents a key research opportunity for the community.

The development of standardized benchmarks and evaluation protocols will be crucial for advancing the field. Current efforts focus on establishing comprehensive test suites that assess video generation quality across multiple dimensions including temporal consistency, motion realism, and semantic coherence. Collaborative initiatives between research institutions aim to create open-source benchmark datasets and evaluation tools that enable fair comparison of different architectural approaches and accelerate progress in video diffusion research.

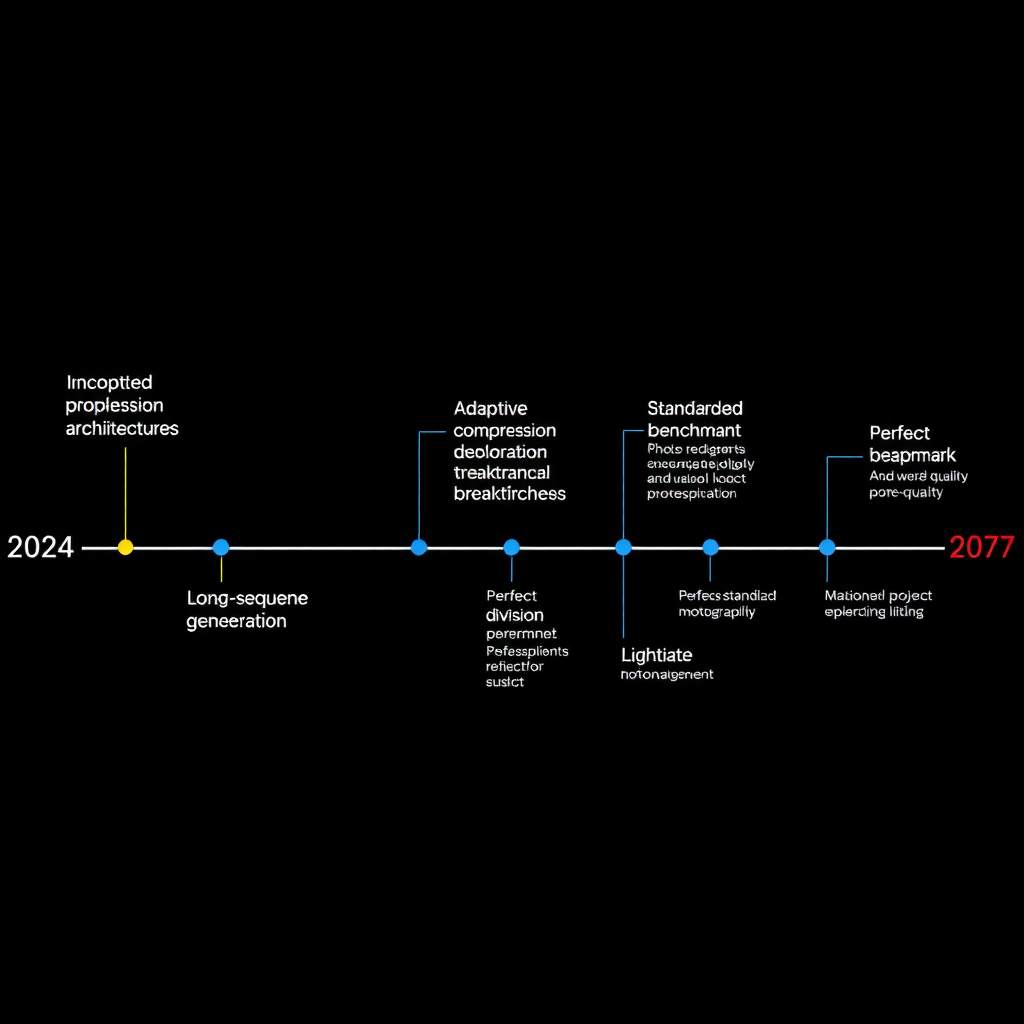

Research Priorities for 2024-2025

- Adaptive Compression: Developing content-aware latent space architectures that optimize compression ratios dynamically

- Long-Sequence Generation: Scaling models to generate coherent videos exceeding 30 seconds with consistent quality

- Standardized Benchmarks: Establishing comprehensive evaluation protocols for fair architectural comparison

Conclusion

The architectural foundations of video diffusion models represent a rapidly maturing field with significant implications for AI research and applications. Latent space representations have emerged as the dominant paradigm, enabling practical video generation systems through dramatic improvements in computational efficiency and memory requirements. Comparative analysis reveals that different architectural approaches offer distinct advantages: standard VAE-based methods provide robust baseline performance, hierarchical representations excel at complex motion, and factorized architectures offer balanced efficiency for general-purpose applications.

Benchmark results from academic studies demonstrate that well-designed latent space architectures achieve quality metrics competitive with pixel-space methods while reducing computational requirements by orders of magnitude. These advances have democratized video generation research, enabling academic institutions and independent researchers to contribute to the field without requiring massive computational infrastructure. The implementation considerations discussed in this article provide practical guidance for researchers developing next-generation video diffusion systems.

Looking forward, the field presents numerous opportunities for innovation in adaptive architectures, domain-specific representations, and long-sequence generation. The research community's collaborative efforts to establish standardized benchmarks and evaluation protocols will be crucial for systematic progress. As video diffusion models continue to evolve, the architectural principles examined in this analysis will serve as foundational knowledge for researchers pushing the boundaries of AI-powered video generation technology.