Understanding Motion Dynamics in AI Video Generation Systems

An in-depth exploration of how artificial intelligence learns to replicate realistic movement patterns in generated video sequences, examining the critical relationship between training data characteristics and motion quality outcomes.

The field of AI-driven video generation has advanced rapidly in recent years, yet one of the most persistent challenges remains the synthesis of realistic motion. While diffusion models such as Stable Diffusion have demonstrated exceptional capabilities in generating static images, translating these techniques to temporal sequences introduces complex dynamics that require the model to capture movement physics, object interactions, and natural motion patterns.

This article examines the mechanisms through which AI video generation systems learn and replicate motion, focusing on the relationship between training data characteristics and the quality of generated movement sequences. It then covers physics-informed approaches that improve motion realism and summarizes case studies from recent academic publications on synthetic video generation.

The Foundation: Training Data and Motion Learning

At the core of any AI video generation system lies its training dataset, which serves as the foundation for learning motion patterns. The characteristics of this data fundamentally determine the system's ability to generate realistic movement. Modern video generation models typically train on millions of video clips, extracting temporal features that encode how objects move, deform, and interact across frames.

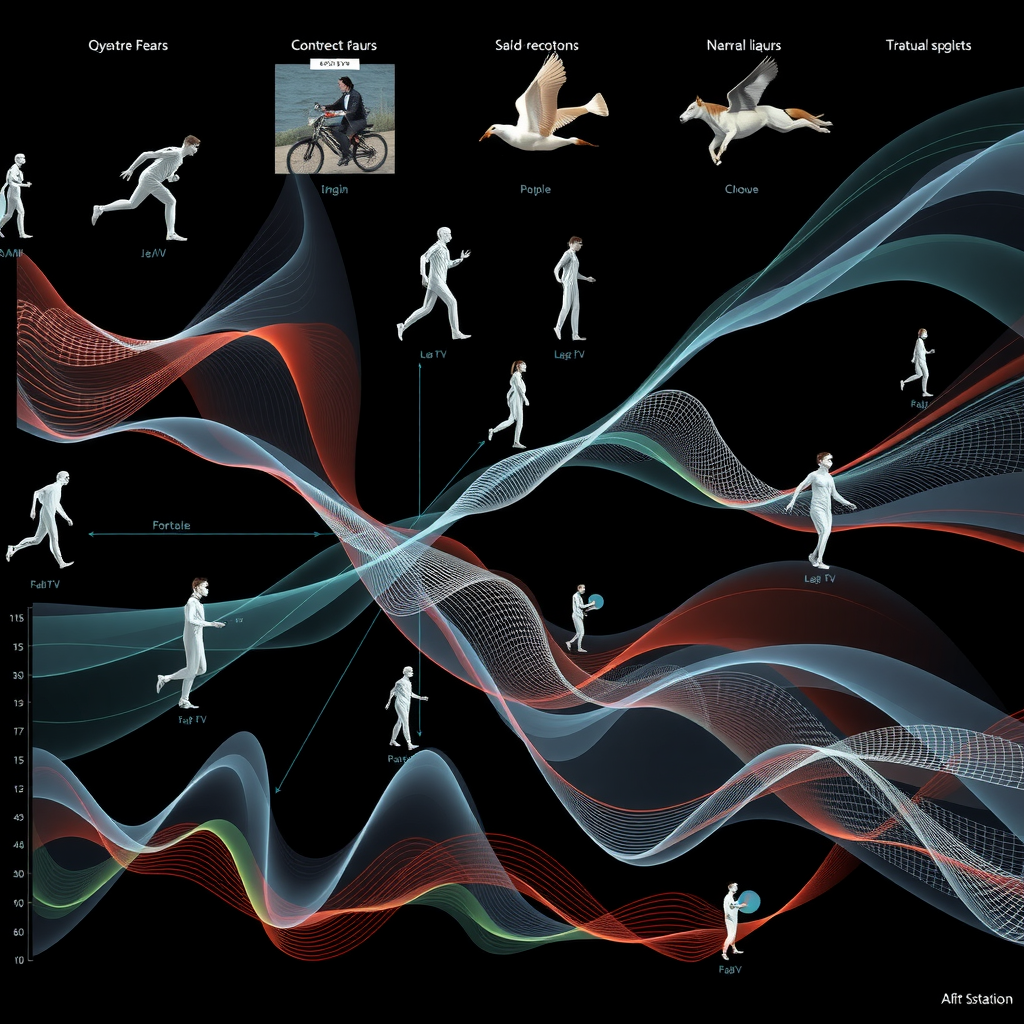

The diversity and quality of training data directly impact motion realism. Datasets must encompass a wide range of motion types: rigid body movements, deformable object dynamics, fluid flow, biological motion patterns, and complex multi-object interactions. Models trained on datasets with limited motion diversity tend to produce repetitive or physically implausible movements, particularly when asked to generate scenarios outside their training distribution.

Temporal resolution plays a crucial role in motion learning. Higher frame rates in training data enable models to capture subtle motion nuances and smooth transitions between frames. However, this comes at the cost of increased computational requirements and storage demands. Recent studies have explored optimal frame rate sampling strategies that balance motion detail capture with practical training constraints, finding that adaptive sampling based on motion complexity yields superior results compared to uniform sampling approaches.
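To make the idea of motion-adaptive sampling concrete, here is a minimal sketch in plain Python. It assumes each frame is a flat list of grayscale pixel values and uses mean absolute frame difference as a stand-in for motion complexity. The function names `motion_scores` and `adaptive_sample` are hypothetical, and this is one simple heuristic rather than the strategy used in any particular study.

```python
from typing import List, Sequence


def motion_scores(frames: Sequence[Sequence[float]]) -> List[float]:
    """Mean absolute pixel difference between each pair of consecutive frames."""
    scores = []
    for prev, curr in zip(frames, frames[1:]):
        total = sum(abs(a - b) for a, b in zip(prev, curr))
        scores.append(total / len(curr))
    return scores


def adaptive_sample(frames: Sequence[Sequence[float]], num_samples: int) -> List[int]:
    """Choose frame indices so that similar amounts of motion fall between picks.

    May return fewer than num_samples indices when several targets land on
    the same frame.
    """
    if num_samples < 2:
        raise ValueError("num_samples must be at least 2")
    if num_samples >= len(frames):
        return list(range(len(frames)))

    # cumulative[i] is the total motion accumulated up to frame i.
    cumulative = [0.0]
    for score in motion_scores(frames):
        cumulative.append(cumulative[-1] + score)
    total = cumulative[-1]

    if total == 0.0:
        # Static clip: fall back to uniform sampling.
        step = (len(frames) - 1) / (num_samples - 1)
        return [round(i * step) for i in range(num_samples)]

    picks: List[int] = []
    last = len(cumulative) - 1
    for i in range(num_samples):
        target = total * i / (num_samples - 1)
        # First frame whose accumulated motion reaches the target.
        index = next((j for j, c in enumerate(cumulative) if c >= target), last)
        if index not in picks:
            picks.append(index)
    return picks
```

On a clip that is static for its first half and moving in its second half, this sketch places most of its picks in the moving half, whereas uniform sampling would spend half of them on identical frames.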

Motion annotation and labeling strategies significantly influence learning outcomes. While some approaches rely on unsupervised learning from raw video data, others incorporate explicit motion annotations such as optical flow fields, depth maps, or semantic segmentation masks. These additional signals provide valuable supervision that helps models understand the underlying structure of motion, leading to more coherent and physically plausible generated sequences.

Physics-Informed Approaches to Video Synthesis

Traditional data-driven approaches to video generation often struggle with physical consistency, producing visually appealing but physically implausible motion sequences. Physics-informed neural networks represent a paradigm shift, incorporating fundamental physical laws directly into the learning process. These approaches constrain the model's output space to solutions that respect conservation laws, kinematic constraints, and dynamic principles.

One prominent physics-informed approach builds the differential equations that govern motion into the training objective or the network architecture. For instance, models generating fluid motion can use the Navier-Stokes equations as soft constraints during training, penalizing solutions that violate basic fluid mechanics. Because the constraint is soft, it encourages rather than guarantees physically consistent output, pushing generated fluid motion toward realistic behavior such as mass conservation, plausible vortex evolution, and viscous effects.
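As a small illustration of a soft physics constraint, the sketch below penalizes non-zero divergence in a 2D velocity field. This corresponds only to the continuity (incompressibility) condition that accompanies the incompressible Navier-Stokes equations, not to the full momentum equation. The grid layout, the function name `divergence_penalty`, and the use of central differences are assumptions made for the example.

```python
from typing import List


def divergence_penalty(
    u: List[List[float]],
    v: List[List[float]],
    dx: float = 1.0,
    dy: float = 1.0,
) -> float:
    """Mean squared divergence of a 2D velocity field over interior grid points.

    u[i][j] and v[i][j] are the x- and y-velocity at row i (y axis) and
    column j (x axis). An incompressible flow has zero divergence, so a
    training loss can add this value as a penalty term.
    """
    rows, cols = len(u), len(u[0])
    if rows < 3 or cols < 3:
        raise ValueError("grid must be at least 3x3 for central differences")

    total = 0.0
    count = 0
    for i in range(1, rows - 1):
        for j in range(1, cols - 1):
            du_dx = (u[i][j + 1] - u[i][j - 1]) / (2.0 * dx)
            dv_dy = (v[i + 1][j] - v[i - 1][j]) / (2.0 * dy)
            total += (du_dx + dv_dy) ** 2
            count += 1
    return total / count
```

A rigid rotation (u = -y, v = x) has zero divergence and incurs no penalty, while a uniformly expanding field (u = x, v = y) has divergence 2 everywhere and is penalized. In a real training loop the same quantity would be computed with a differentiable array library and added to the data loss with a weighting factor.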

Rigid body dynamics present another domain where physics-informed approaches excel. By incorporating Newton's laws of motion and rotational dynamics, models can generate realistic object trajectories that account for gravity, momentum, and collision responses. Recent work reports that even simple physics priors, such as enforcing momentum conservation when no external forces act, improve the realism of generated motion compared to purely data-driven baselines.
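A momentum prior of this kind can be written as a simple loss term. The sketch below assumes an isolated system (no gravity or other external forces), known object masses, and per-frame 2D velocities already estimated for each object. The function name `momentum_conservation_loss` and the data layout are illustrative choices, not taken from a specific paper.

```python
from typing import List, Sequence, Tuple

Velocity = Tuple[float, float]


def momentum_conservation_loss(
    masses: Sequence[float],
    velocities: Sequence[Sequence[Velocity]],
) -> float:
    """Mean squared change in total momentum between consecutive frames.

    velocities[t][k] is the (vx, vy) velocity of object k at frame t.
    For an isolated system the total momentum is constant, so the loss
    is zero when the generated motion conserves momentum.
    """

    def total_momentum(frame: Sequence[Velocity]) -> Tuple[float, float]:
        px = sum(m * vx for m, (vx, _) in zip(masses, frame))
        py = sum(m * vy for m, (_, vy) in zip(masses, frame))
        return px, py

    momenta: List[Tuple[float, float]] = [total_momentum(f) for f in velocities]
    loss = 0.0
    for (px0, py0), (px1, py1) in zip(momenta, momenta[1:]):
        loss += (px1 - px0) ** 2 + (py1 - py0) ** 2
    return loss / max(len(momenta) - 1, 1)
```

Two equal masses that swap velocities in a head-on collision give zero loss, while a sequence in which both objects end up moving in the same direction after approaching each other is penalized.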

Articulated motion, such as human body movement, benefits significantly from skeletal constraints and joint angle limitations. Physics-informed models for human motion generation incorporate biomechanical constraints that prevent anatomically impossible poses and movements. These constraints can be implemented as hard boundaries in the latent space or as additional loss terms that penalize physically implausible configurations during training.
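The two ways of applying joint constraints can be sketched as follows. The joint names and angle ranges in `JOINT_LIMITS` are hypothetical values chosen for illustration, not anatomical reference data, and the hard constraint is applied directly to joint angles here rather than in a latent space.

```python
from typing import Dict, Tuple

# Hypothetical joint ranges in degrees, for illustration only.
JOINT_LIMITS: Dict[str, Tuple[float, float]] = {
    "elbow": (0.0, 150.0),
    "knee": (0.0, 140.0),
}


def joint_limit_penalty(
    pose: Dict[str, float],
    limits: Dict[str, Tuple[float, float]],
) -> float:
    """Soft constraint: sum of squared violations of each joint's range."""
    penalty = 0.0
    for name, angle in pose.items():
        low, high = limits[name]
        if angle < low:
            penalty += (low - angle) ** 2
        elif angle > high:
            penalty += (angle - high) ** 2
    return penalty


def clamp_pose(
    pose: Dict[str, float],
    limits: Dict[str, Tuple[float, float]],
) -> Dict[str, float]:
    """Hard constraint: project every joint angle back into its range."""
    return {
        name: min(max(angle, limits[name][0]), limits[name][1])
        for name, angle in pose.items()
    }
```

The penalty version leaves the model free to violate a limit at a cost, which keeps gradients informative during training. The clamp version guarantees valid poses but gives no gradient signal about how far outside the range a prediction was.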

Temporal Coherence and Motion Consistency

Maintaining temporal coherence across generated video frames represents one of the most significant challenges in AI video synthesis. Unlike static image generation, video models must ensure that consecutive frames exhibit smooth transitions and consistent motion trajectories. Temporal inconsistencies manifest as flickering artifacts, sudden object appearance changes, or discontinuous motion paths that immediately reveal the synthetic nature of generated content.

Recurrent architectures and temporal attention mechanisms have emerged as effective tools for maintaining coherence. These approaches condition each frame's generation on previous frames, creating dependencies that encourage consistency. Long Short-Term Memory (LSTM) networks and Transformer-based temporal attention layers can capture both short-term motion dynamics and long-range temporal dependencies, helping generated sequences stay coherent over extended durations.
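The conditioning on previous frames can be illustrated with a stripped-down causal temporal attention step. This sketch assumes each frame has already been reduced to a single feature vector and omits the learned query, key, and value projections that a real Transformer layer would have. The function name `causal_temporal_attention` is hypothetical.

```python
import math
from typing import List, Sequence


def causal_temporal_attention(features: Sequence[Sequence[float]]) -> List[List[float]]:
    """Scaled dot-product attention over time with a causal mask.

    features[t] is the feature vector of frame t. The output for frame t
    is a softmax-weighted average of features[0..t], so each frame can
    attend to itself and to earlier frames but never to later ones.
    """
    dim = len(features[0])
    outputs: List[List[float]] = []
    for t, query in enumerate(features):
        history = features[: t + 1]
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in history
        ]
        # Numerically stable softmax over the visible history.
        peak = max(scores)
        weights = [math.exp(s - peak) for s in scores]
        norm = sum(weights)
        weights = [w / norm for w in weights]
        outputs.append(
            [sum(w * key[j] for w, key in zip(weights, history)) for j in range(dim)]
        )
    return outputs
```

Because of the causal mask, changing a later frame never alters the outputs for earlier frames, which is the property that lets such a layer be used when frames are generated one after another.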

Optical flow estimation plays a crucial role in enforcing motion consistency. By explicitly modeling pixel-level motion between frames, models can ensure that object movements follow smooth, continuous trajectories. Some approaches incorporate optical flow as an intermediate representation, first generating flow fields that describe motion, then warping previous frames according to these flows to produce subsequent frames. This two-stage process naturally enforces temporal coherence while providing interpretable motion representations.
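The warping step of this two-stage process can be sketched in a few lines. The example assumes a backward flow field, meaning each pixel of the new frame stores the offset to its source location in the previous frame, and uses nearest-neighbor lookup with border clamping for simplicity. Production systems typically use bilinear sampling and handle occlusions separately. The function name `warp_frame` is illustrative.

```python
from typing import List, Sequence, Tuple

Flow = Tuple[float, float]


def warp_frame(
    prev_frame: Sequence[Sequence[float]],
    backward_flow: Sequence[Sequence[Flow]],
) -> List[List[float]]:
    """Produce the next frame by sampling the previous frame along a flow field.

    backward_flow[y][x] = (dx, dy) means the pixel at (x, y) in the new
    frame takes its value from (x + dx, y + dy) in the previous frame.
    Source coordinates are rounded to the nearest pixel and clamped to
    the image border.
    """
    height, width = len(prev_frame), len(prev_frame[0])
    warped: List[List[float]] = []
    for y in range(height):
        row: List[float] = []
        for x in range(width):
            dx, dy = backward_flow[y][x]
            src_x = min(max(int(round(x + dx)), 0), width - 1)
            src_y = min(max(int(round(y + dy)), 0), height - 1)
            row.append(prev_frame[src_y][src_x])
        warped.append(row)
    return warped
```

With a constant flow of (-1, 0), every pixel reads from its left neighbor, so the image content shifts one pixel to the right and the leftmost column is repeated at the border.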

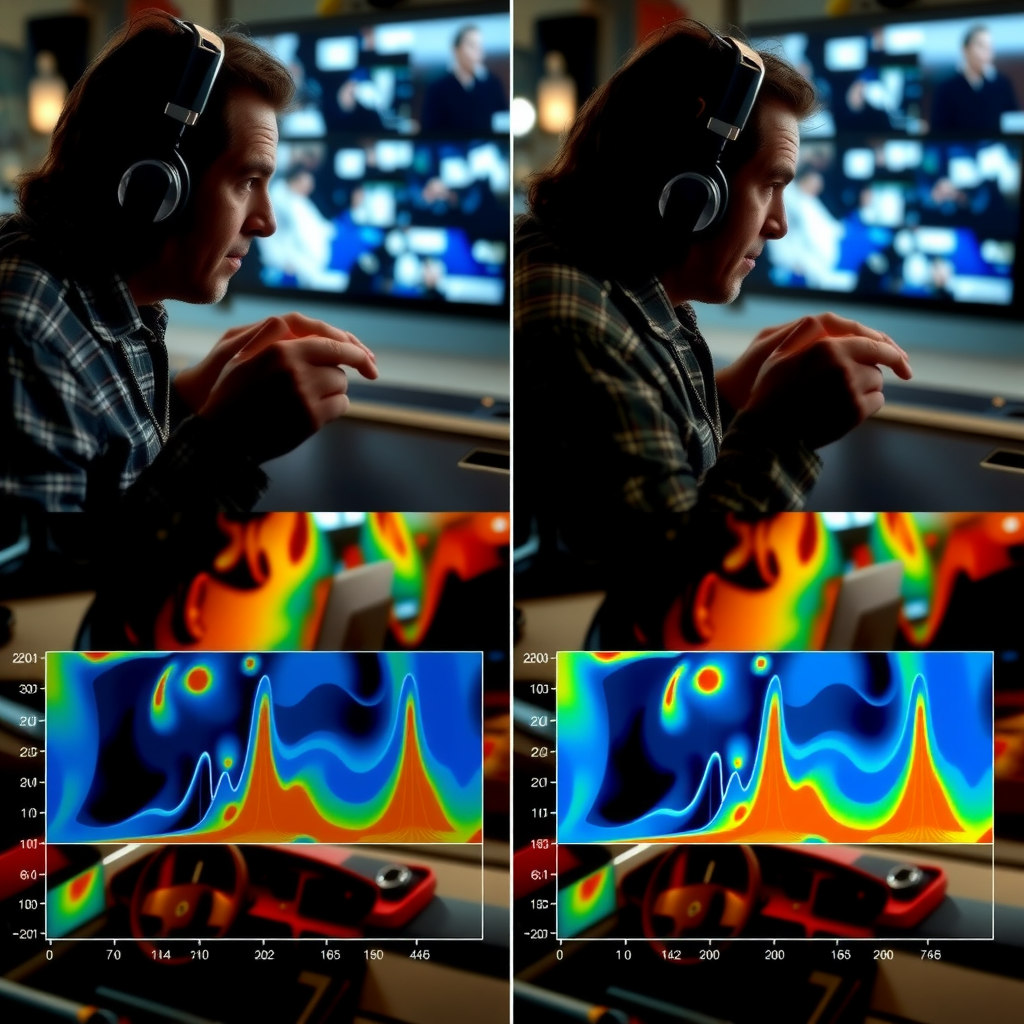

Perceptual loss functions that operate in the temporal domain have proven effective for improving motion quality. Rather than comparing individual frames independently, these losses evaluate sequences of frames, penalizing temporal inconsistencies and rewarding smooth motion patterns. Temporal perceptual losses can be computed using pre-trained video understanding networks that have learned to recognize natural motion patterns, providing a learned metric for motion quality that correlates well with human perception.

Case Studies from Recent Academic Research

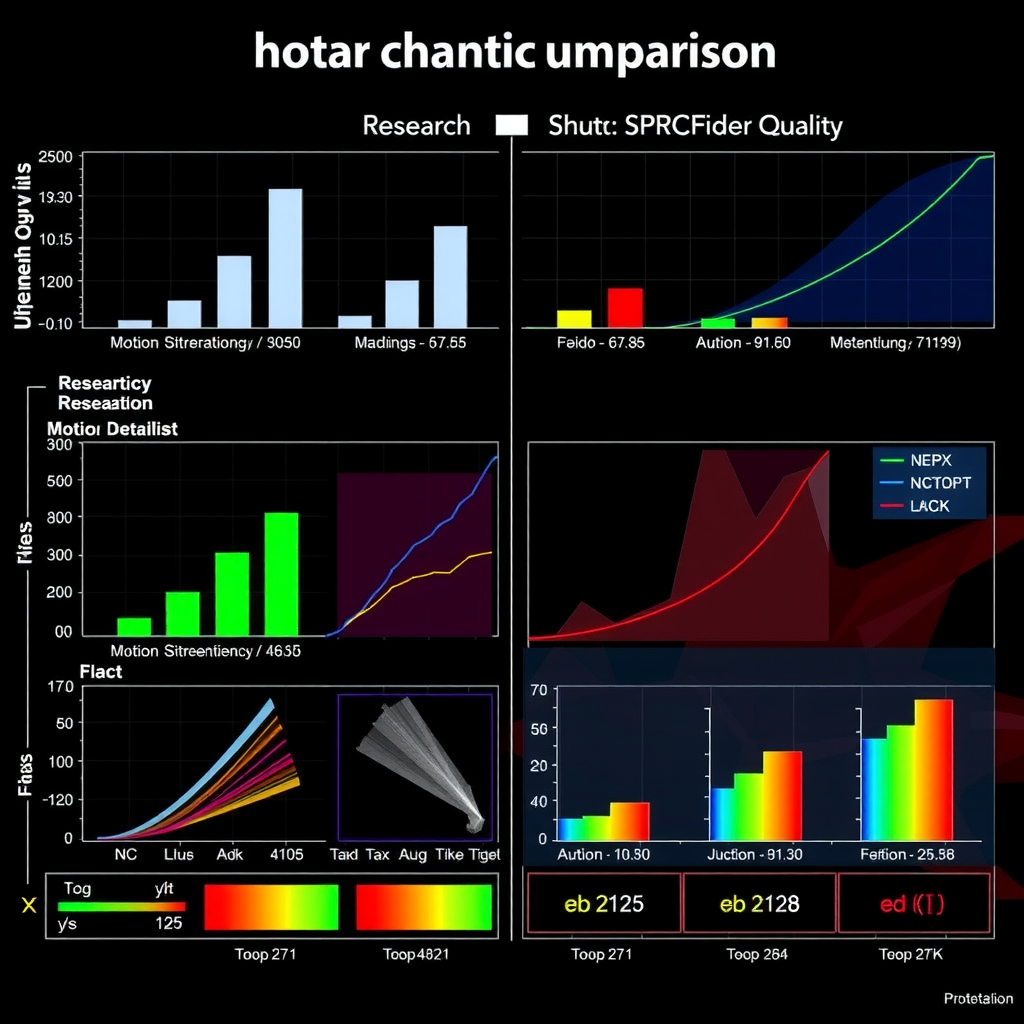

Recent academic publications have demonstrated significant advances in motion realism through innovative architectural designs and training strategies. One notable study from a leading computer vision research group introduced a hierarchical motion decomposition approach that separates global camera motion from local object movements. This decomposition enables the model to handle complex scenes with multiple moving objects while maintaining consistent camera trajectories, addressing a common failure mode in earlier video generation systems.
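The separation of global and local motion can be illustrated with a deliberately simple stand-in. This is not the method of the study described above. It assumes that camera motion is a pure translation and that background pixels dominate the frame, so the per-component median of the flow vectors approximates the camera motion. The function name `decompose_flow` is hypothetical.

```python
from statistics import median
from typing import List, Sequence, Tuple

Flow = Tuple[float, float]


def decompose_flow(flow_vectors: Sequence[Flow]) -> Tuple[Flow, List[Flow]]:
    """Split flow vectors into a global translation and local residuals.

    The global component is the per-axis median of all flow vectors,
    which is robust to a minority of independently moving pixels.
    The local component is what remains after subtracting it.
    """
    global_dx = median(dx for dx, _ in flow_vectors)
    global_dy = median(dy for _, dy in flow_vectors)
    local = [(dx - global_dx, dy - global_dy) for dx, dy in flow_vectors]
    return (global_dx, global_dy), local
```

For a frame in which most pixels move by (2, 0) because of a camera pan and a few move by (5, 1) because they belong to a moving object, the sketch returns (2, 0) as the global motion and (3, 1) as the object's residual motion.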

Another groundbreaking work explored the use of adversarial training specifically designed for temporal dynamics. The researchers developed a temporal discriminator that evaluates motion realism across multiple timescales, from frame-to-frame transitions to long-term trajectory consistency. This multi-scale temporal adversarial training significantly improved motion quality, particularly for complex scenarios involving rapid movements or intricate object interactions.

A particularly influential study investigated the role of motion priors learned from large-scale video datasets. The researchers demonstrated that pre-training on diverse motion patterns, even from unrelated domains, significantly improves a model's ability to generate realistic movement in target applications. This transfer learning approach suggests that motion understanding is a generalizable skill that can be leveraged across different video generation tasks.

Recent work on controllable motion generation has introduced methods for explicit user control over movement characteristics. These systems allow users to specify motion trajectories, speeds, or styles through intuitive interfaces, while the model handles the complex task of generating photorealistic video content that follows these specifications. Such controllability is crucial for practical applications in content creation, animation, and visual effects production.

Evaluation Metrics and Quality Assessment

Quantifying motion quality in generated videos presents unique challenges that extend beyond traditional image quality metrics. While metrics like Peak Signal-to-Noise Ratio and Structural Similarity Index work well for static images, they fail to capture the temporal dynamics that define motion realism. The research community has developed specialized metrics that specifically evaluate motion characteristics in generated video sequences.

Fréchet Video Distance (FVD) has emerged as a standard metric for assessing overall video quality, including motion realism. This metric compares the distribution of features extracted from generated videos against those from real videos using a pre-trained video understanding network. Lower FVD scores indicate that generated videos more closely match the statistical properties of real footage, including motion patterns, temporal dynamics, and scene composition.
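For reference, the Fréchet distance underlying this metric is commonly computed by fitting a Gaussian to each set of features. The formula below is a sketch under that assumption, where the symbols are introduced here for illustration: $\mu_r, \Sigma_r$ are the mean and covariance of features from real videos and $\mu_g, \Sigma_g$ those from generated videos.

```latex
\mathrm{FVD} = \lVert \mu_r - \mu_g \rVert_2^2
  + \operatorname{Tr}\!\left( \Sigma_r + \Sigma_g - 2 \left( \Sigma_r \Sigma_g \right)^{1/2} \right)
```

The first term measures how far apart the average features are, and the trace term measures how differently the features spread around those averages. The score is zero when both distributions have identical mean and covariance.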

Motion-specific metrics focus on evaluating the physical plausibility and smoothness of generated movement. Optical flow consistency measures how well motion vectors align between consecutive frames, detecting temporal discontinuities and unnatural jumps. Acceleration profiles can be analyzed to identify physically implausible sudden changes in velocity that would not occur in real-world scenarios. These physics-based metrics provide objective assessments of motion realism that complement perceptual quality measures.
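An acceleration-based check of this kind can be sketched with finite differences. The example assumes that a tracker has already produced one 2D position per frame for an object, and that a plausibility threshold `max_acceleration` is supplied by the user. Both function names and the threshold are illustrative assumptions.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def acceleration_profile(positions: Sequence[Point], fps: float) -> List[float]:
    """Acceleration magnitude at each interior frame, by central second difference.

    Entry i of the result corresponds to frame i + 1 of the input.
    """
    dt = 1.0 / fps
    profile: List[float] = []
    for p0, p1, p2 in zip(positions, positions[1:], positions[2:]):
        ax = (p2[0] - 2.0 * p1[0] + p0[0]) / dt**2
        ay = (p2[1] - 2.0 * p1[1] + p0[1]) / dt**2
        profile.append(math.hypot(ax, ay))
    return profile


def implausible_frames(
    positions: Sequence[Point],
    fps: float,
    max_acceleration: float,
) -> List[int]:
    """Indices of frames whose acceleration magnitude exceeds the threshold."""
    return [
        i + 1
        for i, a in enumerate(acceleration_profile(positions, fps))
        if a > max_acceleration
    ]
```

An object that moves one unit per frame and then suddenly jumps eight units produces a spike in the profile at the frames around the jump, which the threshold check flags. Units depend on the tracker: with pixel positions, acceleration is in pixels per second squared.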

Human perceptual studies remain the gold standard for evaluating motion realism, as they directly measure whether generated videos appear natural to human observers. These studies typically involve showing participants pairs of videos and asking them to identify which is real or rate the realism of motion on standardized scales. While time-consuming and expensive, perceptual studies provide invaluable ground truth for validating computational metrics and understanding what aspects of motion quality matter most to human viewers.

Future Directions and Open Challenges

Despite remarkable progress in AI video generation, significant challenges remain in achieving truly photorealistic motion across all scenarios. Long-duration video generation continues to pose difficulties, as maintaining coherent motion and consistent object identities over extended sequences requires sophisticated memory mechanisms and planning capabilities that current models lack. Future research must address how to generate videos spanning minutes or hours while preserving narrative coherence and motion consistency.

Complex multi-object interactions represent another frontier for improvement. While current systems handle simple scenarios reasonably well, generating realistic interactions between multiple moving objects, particularly with contact dynamics and physical exchanges, remains challenging. Incorporating more sophisticated physics simulation engines into the generation process may provide a path forward, though balancing computational efficiency with physical accuracy presents ongoing trade-offs.

The integration of diffusion techniques with video generation holds particular promise for improving motion quality. By building on the strong image generation capabilities of diffusion models such as Stable Diffusion and extending them to the temporal domain with careful attention to motion dynamics, researchers are developing systems that combine photorealistic appearance with physically plausible movement. This convergence of image and video generation technologies represents an exciting direction for future development.

As AI video generation systems continue to evolve, understanding and improving motion realism remains central to their success. The approaches discussed in this article, from physics-informed architectures to sophisticated temporal coherence mechanisms, provide a foundation for ongoing advances. Through continued research, collaboration, and open sharing of techniques and datasets, the research community is steadily progressing toward the goal of generating video content that is indistinguishable from reality in both appearance and motion.